3 things we got wrong during our first user testing

RocketVisor is launching our all-new Visor this spring. The Visor lets marketing, sales, support, and success teams collaborate on accounts. It does this by adding thoughtful artificial intelligence to Google Chrome that anticipates your next action. The first launch will focus on sales professionals. This redesign comes after 18 months of learning through MVP iterations.

Alpha testers fell in love with our first app, Whiteboards, because of its simplicity. How do we maintain that simplicity while building an enterprise-grade account collaboration suite?

Our customers are busy and overwhelmed by tools. Because the Visor adds to this stack, it must be easy to use. Even though we are redesigning the product based on user feedback, we can't accurately judge the usability of something new that we designed ourselves.

The only accurate way to learn is to test the product with real users. Prototyping software makes it cheap to test parts of a product's design before building it. My senior thesis was about the psychology of UX design, and I studied the product design process in a Product Management 101 class. But neither prepared me for how unexpected some real-world test results can be.

In some cases, the results seemed to contradict our interpretation of well-established usability principles. Here are a few learnings from our first two rounds of user testing:

1) Clever icons can reduce usability

Usability Principle:

Design products to use the least amount of text that gets most users to their intended goals.

Where it didn’t make sense:

Small, simple Visor apps are the main focus of the Visor. They work because the Visor automatically understands which account a salesperson is working on. When the Visor knows which account is active, the user can switch between a few lightweight apps with ease.

We decided to give each Visor app a clever name and cool icon. This was a mistake.

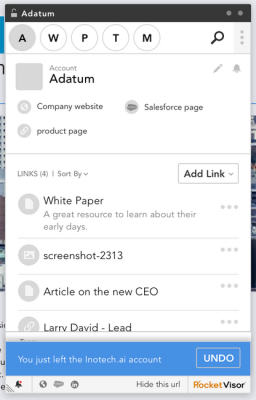

Our real icons were going to incorporate the first letter of each app's name. For the prototypes, we used circular app icons showing just that letter. In the image above, these are the round icons for About, Whiteboard, Pings, Timeline, and Missions.

During our first round, testers were unclear on what the circular icons meant. When we asked them to find their whiteboard, they generally went to the three-dot menu instead. Once we explained what the W meant, the app icons made sense.

But that initial experience failed to convey the product’s main value. Some users thought the app icons were other accounts they could switch to.

We considered adding hover hints, but they likely wouldn't have been enough. The app names themselves didn't describe what the apps did simply enough. Testers had a hard time guessing what we meant by “Pings” and “Missions.”

Before the next day of testing, we adjusted the prototypes. We replaced the icons with simple app names. We also changed from branded app names like “Whiteboards” and “Missions” to basic names like “Notes” and “Tasks.”

The results were immediately better. The next set of test users quickly understood the main value of the product. They also understood that they could switch between apps for a given account. The tab-like structure of the apps made more sense to them than the app bar.

Takeaway Number 1:

Icons can be great for well-understood concepts, like search. Otherwise, stick to simple self-descriptive words. At the end of the day, it’s better to be simple and direct than clever.

2) Don’t rely on text to guide users

Usability Principle:

Design products to use the least amount of text that gets most users to their intended goals.

Where it did make sense:

In one user story, we asked testers to invite a colleague who had not yet signed up for RocketVisor. Testers ran into a critical roadblock with the first design because we relied too much on text to guide them.

They were asked to invite a colleague named “Marzha Baker.” They found it easy to click the “Person with a Plus sign” icon to invite someone. Then, when a tester typed ‘Marzh…’ and no results were found, the response said:

“No results. Type their email address to add.”

Before: When no results were found for an invite search, the text suggested typing their full email address to add them.

Most testers saw just the “No results” part of the message and stopped reading. They thought they couldn’t invite Marzha. So about half of them immediately went for the X-button to close the invite dialog.

Because our product is a collaboration platform, inviting new users must be seamless. Not finding a colleague as an existing user cannot be a dead end.

Between the user testing sessions, we put together a new design that turned “Invite via Email” into a clear call-to-action button.

The results were immediately better. Users in the second cohort clicked the “Invite via Email” button right away and completed the user story.

Takeaway Number 2:

Beware of unexpected “dead ends” in the product. Don't rely on text instructions alone. Provide clear call-to-action buttons to avoid dead ends.

3) Simple words can have unintended meanings

Usability Principle:

Anticipate that users will make mistakes; make it easy to undo actions.

This principle also applies when the product is the one that makes a mistake. Our product has an artificial intelligence component that guesses what a user might do next. When its confidence is high enough, it can even take that action on the user's behalf. Sometimes these guesses will be wrong, in which case the user should be able to undo the action easily.

Taking this quite literally, we designed a notification with an “Undo” function.

We were surprised to learn that testers were concerned about what would happen when they pressed a button that said “Undo.”

It was particularly confusing to them since they didn’t actually do anything that was being undone. It was the product that did something.

We also learned that testers associate the word “Undo” with losing work they did. Because it wasn't clear which of their actions was being undone, they worried about the impact.

For the next iteration, we changed the text to say “Return.”

These tests went much more smoothly. The testers knew exactly what to do and had no reservations clicking the button.

Takeaway Number 3:

User testing is as much about the copy as it is about the layout and positioning of UI elements. When prototyping, pay attention to how testers interpret the meaning of your words.

Takeaway 4: You won’t become a great product designer just by reading articles like these

Usability design is far from a rules-based discipline. Knowing the principles simply isn’t enough. As we found during these tests, sometimes applying the principles will at first guide you in the wrong direction. Testing is the only way to know for sure whether your design is intuitive.

No amount of expert-studying or blog-reading is a substitute for getting out there and testing designs on real people. So what are you doing still reading? Get out there and test something.

Like to change people’s lives by designing beautiful, useful products?

So do we. And we’re hiring a full-time product designer in New York City.